Princeton computer scientists are trying to bring virtual reality into the real world, and potentially improve a range of experiences, from remote collaboration and education to entertainment and video games.

Virtual and augmented reality technology will one day be ubiquitous, according to Parastoo Abtahi, assistant professor of computer science. When it is, it will be crucial for users of such technology to be able to interact seamlessly with the real world.

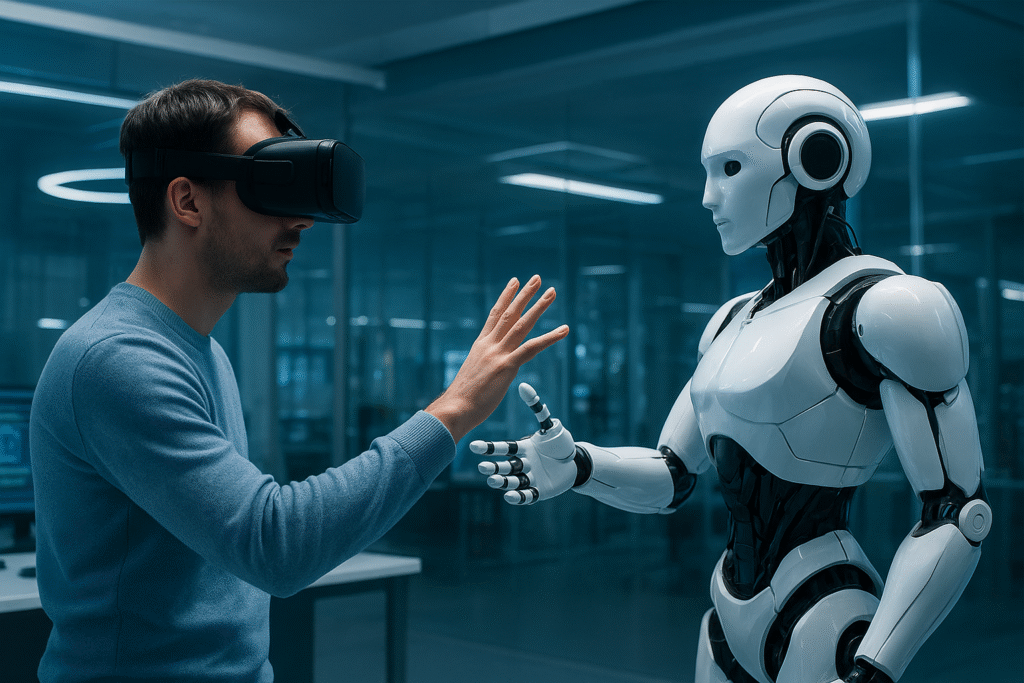

Abtahi and postdoctoral research scientist Mohamed Kari are working toward that goal by combining mixed reality technology with a physical robot that the user can direct. Their study will be presented later this month at the ACM Symposium on User Interface Software and Technology in Busan, Korea.

A person using the system can put on a mixed reality headset, choose an item such as a drink from a list, and then virtually place it on the table in front of them. Or they can command an animated bee to bring a bag of chips to them on the sofa.

The drink and the chips may at first exist only as pixels. Within about a minute, though, they appear in the physical world, as if by magic. It isn't magic, of course: a robot, hidden from the user's view, has delivered them. “Visually, it feels instantaneous,” said Abtahi.

By stripping away “all unnecessary technical details, even the robot itself,” as Kari put it, the system makes the experience feel seamless. The goal is to make the technology disappear so that the interaction between human and computer feels intuitive.

One of the main technical challenges in this system is communication. The user needs an easy way to tell the computer what they want, for instance by pointing at a pen on the other side of the room and moving it to the table in front of them. Kari and Abtahi developed an interaction method in which a single, simple hand gesture selects an object, even from a distance.

These gestures are then converted into instructions that the robot is programmed to follow. The robot has its own mixed reality headset, so it knows where objects have been positioned in the virtual world.

The other technical challenge is removing objects from, and inserting them into, the user's view. Abtahi and Kari use a technique known as 3D Gaussian splatting to render a realistic digital replica of the physical environment. Once everything in the room has been scanned, the system can remove something from view, such as a passing robot, or insert something, such as an animated bee.

This requires scanning and rendering every inch of the room and every object in it. For now, the process is somewhat laborious, Abtahi said. Automating it, perhaps by having a robot do the scanning, is something her lab plans to research in the future.